Who Says Research Has to Be Boring?

“We are moving along the boundary of knowledge. We can access breakthrough research earlier than it is officially published and we can apply it to our products. We constantly shift our limits.”, says Marián Beszédeš, Head of Innovatrics R&D.

You are the head of the R&D team. What do you examine or develop?

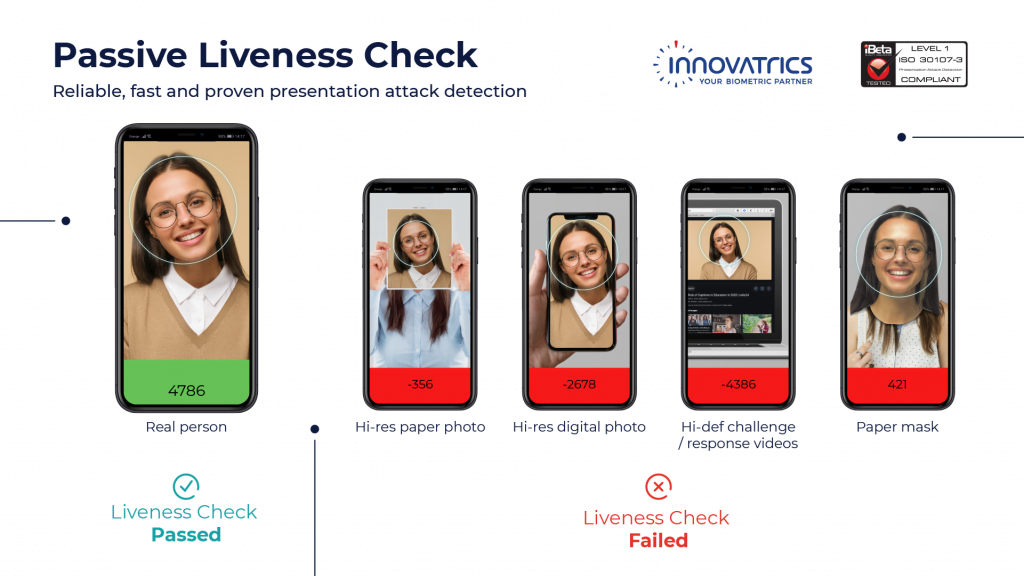

Here’s an concrete example of what we do – an internet banking mobile application that recognizes you are a living human and not just a photo, which some fraudsters sometimes use to cheat a bank. The solution is called facial liveness detection, which recognizes whether an image is a photograph, a statue, rubber mask, or a real person being scanned through the camera.

We work in three teams. The Face Recognition Team is focused on the overall research and development of methods in the area of facial recognition and their integration in the resulting IFace SDK software library. Another is the Computer Vision Team that focuses on the issues of defect detection in industrial production, the segmentation of fingerprints of a human hand, and detection of significant points in fingerprints. And we also have the Algo Team, which is focused on developing quick search algorithms and OCR – recognition of signs and small fingerprints which can be used in unlocking mobile phones. In all these three teams, we use the latest knowledge from machine learning, computer vision and deep neural networks.

Facial liveness detection sounds quite interesting. How do you teach an algorithm to ascertain from a single photo that I am a living human?

This cannot be done by only one person, of course. It’s a combination of work from many people in the team. First, we choose a suitable method from already published scientific works in magazines, books of conference contributions, or in benchmarks, or from direct participation at a scientific conference. Then, we study the selected method carefully until we understand it and we try to re-implement it in a prototype. We carefully test the prototype and discover its strong and weak points. Afterwards, we try to improve the method and integrate it in our solutions. It is basically work which most students do when preparing a well-researched technical thesis. Of course, it’s on a much bigger scale.

On a lighter note, do you think students who worked hard on their thesis and resisted the temptation to plagiarize are suited for this job?

Certainly. Everyone who wants to write their thesis honestly needs to study a certain method, understand and apply it. However, in the academe, similar to our case, applied research is made. But we go a bit further, we push the envelope of knowledge. We can integrate methods which have not yet been published officially and are only pre-prints, which happen quite often.

What happens after R&D ends?

Software libraries developed in R&D teams are taken over by other teams at Innovatrics or by our technologically-skilled customers, and are integrated afterwards into their products.

You have mentioned terms such as deep neural networks and machine learning. How do you use them in particular?

The tasks we deal with such as identifying faces in an image, recognizing if it is a woman or a man, estimating their age, recognizing text in a photograph of a document, or recognizing a fingerprint are too complicated to be resolved by conventional methods of image processing. The algorithms of machine learning of deep neural networks help us create a new specialized algorithm which will cope with such difficult tasks. We prepare input data and related requested outputs which we then hand over to precisely selected and set methods of machine learning. We are teachers, and deep neural networks are students, the correctness of whose answers we assess and mark, and the students try to score the best marks. However, you need a lot of data to train a deep network.

What data do you need as in the case of face liveness detection?

On the one hand, we need photos of real living faces, and on the other, we need photos of artificial ones – fake faces. It is easy to get living faces – for example, selfies which people take. Still, we need to provide the neural network with artificial faces which we need to create somehow. Due to that, we have printed several thousands of photos on paper. We have bought silicone masks which we wear on our faces to try to cheat the algorithm. We have also combined paper masks with wigs and glasses. We also create fake faces digitally – we digitally stick a face cut out of a live photo onto a real face. And that is still not enough. We multiply each photo – real or fake with small changes – by adding noise, blurring, rotating or shifting it a little. This is called data augmenting wherein a huge amount of data is generated in this manner.

What happens to the data then?

Visual data is used in the learning process where it is transformed into our software in the form of trained neural networks. This is a great leap in software development as it is no longer written by a programmer but by a learning algorithm of a neural network. Some experts call it Software 2.0. Due to that, it is necessary to change not only thinking in software development but also the internal infrastructure. Data needs to be identified with versions, filtered, merged, and cleaned. And, of course, it need not be only faces; for example, we have also made detection of damage to asphalt on the roads and iris biometry. There is simply a huge volume of data and we need to create meaningful datasets to train specialized neural networks. So, in addition to a researcher and developer, now we also need a data specialist who will look after all such information.

What about improving fingerprint biometrics?

Our Computer Vision team is currently trying to apply deep neural network technology even in fingerprints. They help us look for more precise segmentations of fingers and also positions and types of individual minutiae, so-called minutiae points. We want to drive out the historical technology to get a faster and more accurate solution.

What happens when all these is accomplished?

We will continue. Technology is developing very quickly. The facial biometric solution which we submitted last August to a global benchmark – the Face Recognition Vendor Test prepared by NIST (National Institute for Standards and Technology, USA) was ranked twelfth. We have a chance to improve our solutions and correct mistakes, which is great because our focus is on the product rather than not on the project. This year was again very successful, and we ranked thirteenth. We constantly need to improve.

What does continuous improvement mean? For example, ordinary people experienced difficulties with facial biometrics when face masks started to be worn and their mobile phones could not identify them when they tried to pay in shops.

During the last half-year, we also worked on improving the precision of identifying faces wearing face masks. IFor utmost precision, we need the solution to be even faster. And, if we achieve this, we will want it to work on cheaper devices as well such as industrial cameras. We also added new software features like detection of irises, faces, bodies, etc. It is a never-ending process.

How many people work on all those tasks?

There are 15 of us in total. Most of us (eight) belong to the Face Recognition team. Most of us are versatile – we can read an article, make a prototype, and integrate and optimize it. These are the people who have several years of experience in our team. Now we are looking for at least three more people who we are ready to be trained, namely for the positions: Computer Vision/Machine Learning Engineer and Image Datasets Processing Owner/Developer. Suitable candidates should have analytical skills and experience with programming in Python. Experience with biometrics is an advantage. For the Algorithm team, we are looking for an Algorithm Developer who should know C++ language and have excellent orientation in algorithmization and mathematical models. By the way, people in our company constantly learn and move forward. Each of us has a chance to attend an international conference during the year. When new people join us, we recommend them courses which may help them improve at work. The company pays for the courses. And, of course, we have team-building events where we are always happy to celebrate our joint achievements.